5 Technology Trends for this Decade

Every new decade experiences more change than the previous one. Quite a bit happened between 2001 and 2011, and a lot more can be expected between 2011 and 2021. Some of what I’m about to present is likely to be a set of quite conservative “predictions”, as they are based on trends that are already well under way or that have enormous potential we are very unlikely to pass up, whatever controversy and conflicts may arise over them.

1. Predominantly Mobile Computing (or the “Post PC” era)

There has been a lot of talk about the death of PCs and the post PC era, as we observe a rather obvious and continuous rise in the uptake of mobile devices. I wouldn’t say the desktop PC market will die, though; it will revert to a niche segment while ultrabooks, tablets and smartphones effectively take over. The rise of mobile computing can be attributed to multiple factors:

Rise in processing power

Processing power is a major factor shaping these trends because it determines what you can actually do with any given device. Quite simply, more processing power can be packed into ever smaller devices, allowing you to do more and more with less and less. Modern-day smartphones pack as much power as some of the most powerful desktop PCs of 2001.

At this rate it is quite possible that by 2021 our smartphones (more like “superphones”) will be as powerful as modern PCs equipped with an i7 CPU and 4GB of RAM. You’ll probably be able to plug a smartphone into a dock to get a picture on a big screen and more comfortable input methods, and you’ll be able to do most of your computing tasks with it. Ditto for tablets, which due to their larger size can offer even more power.

Ultrabooks will be the stepping stone between the traditional notebooks and tablets, combining the convenient and familiar form factor and power of notebooks with the portability of tablets.

Ultrabooks are probably what will be left when the tablet finishes its ongoing cannibalization of the PC market, and will be the only popular devices to still run “traditional” operating systems such as Windows, Mac OS X and Linux. For many people these will be their workhorse machines, not desktop PCs, because they will have enough power even for such hungry tasks as music production, design, video editing and probably even some graphically intensive gaming.

So what will be left for desktop PCs? They’ll effectively be relegated to the role of a home supercomputer, for the few of us who may like such a luxury. Slimmed-down variants may still be used as media servers (à la Boxee Box, Roku or Apple TV), although game consoles or soon-to-emerge smart TVs could easily remove the need for those too.

A related trend is the increasing affordability of solid-state storage, which diminishes the need for mechanical hard drives and optical drives and thereby contributes to portability. Solid-state drives will gradually replace hard drives, while small flash drives known as “USB sticks”, in combination with digital distribution, will replace CDs and DVDs.

Cloud Computing

Cloud Computing is still essentially about increasing the amount of processing power available to portable devices, only in a different way. Thanks to cloud computing a device doesn’t have to do all of its processing locally within the device itself. All it needs is a sufficient amount to run online applications and services.

An extreme example of this is the Chromebook, which is meant to demonstrate just how much can be done today by relying solely on cloud-based applications and services accessed from a relatively cheap and underpowered netbook.

That said, I don’t think exclusive reliance on the cloud will persist as the way of the future. Instead, I think the cloud will predominantly play a complementary role. The best example of this may be using cloud services to seamlessly sync data between devices, which significantly helps the practicality of using mobile devices for all of our computing needs, but not all apps which we use to view and edit this data will necessarily come from the cloud. Too many people may prefer relying on something that sits on their own device instead of something that they could potentially lose access to when they need it (in case of connection problems or downtimes).

2. Pervasive Internet of Things + Augmented Reality

This trend is largely an outcome of the increased mobilization of mainstream computing, and related trends. With more processing power being available to ever smaller devices, and with the ever more pervasive availability of high speed internet access comes the possibility of connecting more and more devices which we previously didn’t really think about as worthy of an internet connection. The ultimate outcome of this is what is often referred to as “The Internet of Things” which in a nutshell implies the connectedness of everything that could in any way benefit from being connected.

For example, this could involve smart home appliances that you can control and monitor with an app on your smartphone or tablet, and RFID tags or QR codes on everything you buy. Through these tags, food packaging could tell your fridge the expiration date and optimal temperatures, and the fridge would in turn transmit this information to your app. A closet may keep an inventory of everything you have in it. With everything digitally accounted for, you could ultimately have a search engine for your home, or indeed your entire life, allowing you to know at a glance everything there is to know about what you have, where it is, and what state it may be in.

Outside of home this could involve roads which measure their traffic and make this data available online, and cars which use this and other data to plan optimal routes to a given destination. In fact, such information contributes to the feasibility of driverless cars, which may very well enter common use within this decade.

Companies could track the inventory in their warehouses or the location of specific items in transit. Scientific organizations could use sensors to measure all kinds of things for their research.

In other words as the ubiquity of internet access increases more things will be connected at more places, and as this trend continues more intelligence will become available to everyone about everything everywhere. This by extension should make us able to make smarter decisions about pretty much everything we do or want to do.

Of course, for this to work efficiently we need an efficient method of accessing all of this data when we need it, and where we need it. Various apps on smartphones and tablets are an obvious choice for this, but one thing that plays very well into the hands of this new Internet of Things is Augmented Reality, which literally overlays all of this data across physical objects and locations which this data describes.

As the usefulness of this data increases people will rely on it more, and as they rely on it more Augmented Reality will probably rise in attractiveness. Since holding up a smartphone or a tablet to see this Augmented Reality isn’t quite practical, I think this will quickly lead to wearable solutions such as AR glasses or retina projectors, and helmets for specialized uses, such as this firefighter helmet.

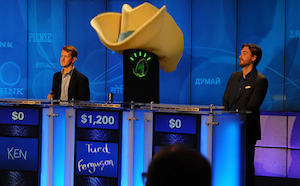

3. Artificial Intelligence Gets More Jobs

IBM Watson can wear many hats

With IBM’s Watson expected to be used in hospitals and tech support, and bots increasingly being employed to write stories (not to mention certain marketing jobs not all of us appreciate), the trend of artificial intelligence literally getting jobs that could previously only be done by humans is already under way. In some cases the job being done by an AI couldn’t ever be done by a human alone, but it may still result in someone’s job being cut.

This trend is driven by the fact that AIs can typically do things better, or more cheaply and efficiently, or both. It’s also a logical extension of our already tremendous reliance on machine intelligence in the running of our world.

As improvements in Artificial Intelligence are married to improvements in robotics, the new jobs taken by AIs may not be limited to the information realm. If you regularly read tech news you’ve probably heard a lot about drones being used for space exploration and in the military to do tasks that humans can’t do at all, that are too dangerous, or that we can’t do as well as a robot can.

Due to demand for greater efficiency this trend can only continue unabated, and go further than a lot of us may expect, something that I also explored in another article. While perhaps not within this decade, the long-term result may be that humans are relegated to creative work and to inventing and discovering new things, until even that gets taken over by machines. By then, I think a lot of people will already have begun to realize the need to augment our abilities in order to keep up and continue having any sense of purpose other than being pampered by our robots (if we’re lucky).

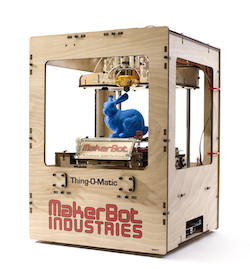

4. Rise of 3D Printing

3D printing isn’t a very new concept. Rapid prototyping machines have been available in industrial manufacturing for a long time, and 3D printers themselves can be viewed as just another type of automated manufacturing robot. The difference is that a 3D printer can, without any special reconfiguration, create a huge variety of items from a given material (typically a form of plastic) by simply stacking layers on top of each other.

What is new is the availability of affordable 3D printers to home users, starting with the likes of RepRap and Makerbot, which come in kits at a cost of about $1200. There are already thousands of users of these machines across the world, and while these are still mainly enthusiasts, they are reminiscent of the enthusiasts who sparked the computer revolution back in the 70s.

It should be only a matter of time before someone puts together a consumer-grade 3D printer with mass appeal and markets it to the general public. Given the tremendous usefulness of these machines their adoption is bound to explode if someone offers the right design at the right price. Speaking of usefulness, apparently objects that can be printed aren’t just limited to those made of plastic; even food can be printed!

As discussed in another article, this will give rise to a whole new breed of “intellectual property” issues as this technology gives people the ability to turn designs stored on a computer into real life objects that they can use. When you add 3D scanners into the mix a lot of the real world objects suddenly borrow some of the properties of digital data, in that they can be copied with ease just as digital content can.

This decade may see the rise of adoption of 3D printers and the beginnings of the IP battles that may result over its use, but I doubt this will ultimately stop the rise of its adoption any more than copyright wars stopped the rise of digital file sharing. As its adoption increases the technology will be improved as well, giving it greater speed and precision.

It may be interesting to note that the advances in nanotechnology may combine with the concept of a 3D printer to create what scifi fans know as “replicators” (from Star Trek) which are effectively 3D printers which build objects atom by atom instead of layer by layer of crude material. 3D printers may be seen as the very early versions of such future nano-assemblers.

5. Leaps in Human Augmentation

There are as many as three ways of augmenting human beings to give us upgraded and new capabilities, all of which have already been demonstrated as possible and even likely. This may be the hardest trend to believe or accept simply because it seems the most radical, but the evidence speaks for itself.

The whole point of technology in general is to allow humans to do what we couldn’t before or to do things better. This is why, despite initial controversies and gag reactions by certain groups with certain sensibilities, it will be very hard to stop Human Augmentation from becoming a serious trend, and I think it will be developing into such a trend within this decade. It is simply a logical extension of what we’ve been doing all along, except now we can empower ourselves directly, and not just through the use of external tools.

Genetic Engineering

The proliferation of genetically modified food (known as GMO foods) and the resulting controversy show how much proficiency already exists in this field, but genetically modified food is just the beginning. Due to rapid scientific advancements the ability to modify or engineer humans before they are born (to create so-called Designer Babies) is just around the corner, with some already standing ready to offer such services as soon as they become possible. Human genetic modification may not be limited to pre-birth modification, though. It is possible to modify adults as well, who would display the newly programmed traits within weeks of genetic treatment.

A recent breakthrough in genomics, which involves the creation of life in a laboratory, demonstrates how far human understanding of genetics has come. Those in the field are talking about a (near) future in which it may be possible to create entirely new life forms by simply designing them in a computer, converting the digital code into genetic code and injecting it into a biomass only to watch it grow into what we’ve designed.

Unlocking our genetic code, understanding which of it applies to which traits, and developing the ability to change it gives us unprecedented possibilities of human augmentation at the most basic level.

Organ Printing

Combining the 3D printing technology mentioned above with the increased understanding of our biology gives us the ability to literally print replacement body parts. The 3D printer puts cells together to form real organs, and contrary to what some may assume, the resulting organs are actually much better than what may be transplanted from another person. The organs are printed to be an exact DNA match to the person for whom the organ is intended so that the body will accept such organs as its own, as if they were there from birth.

An already operational German skin factory is a testament to how down to earth this possibility already is. Given the advances made so far it is easy to imagine being able to order replacement organs within this decade. This has significant life extension implications, as being able to replace faulty or failing organs is a sure-fire way of preventing deaths that were so far not preventable. Theoretically one could keep renewing his or her organs indefinitely.

Of course, possibilities for augmentation are there as well, as these organs could potentially be redesigned to possess certain beneficial traits.

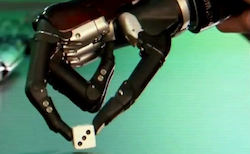

Advanced Prosthetics

The irony here is that it is disabled people who are on the frontier of prosthetics-based human augmentation. Unlike those of us who aren’t missing any crucial components of our bodies, they don’t have much to lose by agreeing to be augmented. If being given a prosthetic leg means a chance that you can walk like a normal person again, the choice is a no-brainer.

What’s been happening more and more, however, is that those with prosthetic legs can not only walk, but run, and run faster than normal people. As the prosthetic technologies are becoming more sophisticated they may actually end up lending greater capacities than are available with normal organic body parts, and possibly without a compromise. The key challenge isn’t so much in designing parts that faithfully emulate and advance upon the organic ones, but making them seamlessly interface with our brain and nervous system.

There is a good chance of great inroads being made to meet that challenge within this decade as our understanding of the brain and our nervous system is advancing rapidly, to a large extent for the purposes of artificial intelligence.

Combining all of these possibilities makes a pretty compelling case that human augmentation is a serious possibility that is already brewing into what could become an industry within this decade.
